Hadoop Framework

Hadoop is an Apache open source framework written in Java. It enables distributed processing of large data sets across clusters of computers using simple programming models. An application built on the Hadoop framework runs in an environment that provides distributed storage and computation across several computers. The framework is designed to scale up from a single system to many machines, each offering local computation and storage.

Architecture of Hadoop

The Hadoop framework consists of four modules:

Hadoop Common

The Java libraries and utilities that the other Hadoop modules rely on.

Hadoop Distributed File System (HDFS)

A distributed file system that offers high-throughput access to application data.

Hadoop YARN

A framework for job scheduling and cluster resource management.

Hadoop MapReduce

A YARN-based system for parallel processing of large data sets.

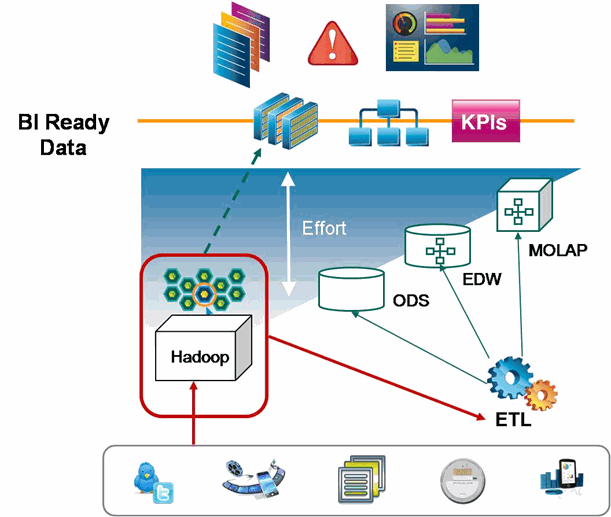
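To make the MapReduce module more concrete, here is a minimal sketch of the map, shuffle, and reduce phases applied to a word count. It is plain Java running in a single JVM and deliberately does not use the Hadoop API; the class name `WordCountSketch` and its methods are hypothetical names chosen for illustration. In a real cluster the map and reduce steps would run in parallel on many machines, with YARN scheduling the work and HDFS holding the input and output.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustration only: mimics the three MapReduce phases in one process.
// None of these names come from the Hadoop libraries.
class WordCountSketch {

    // Map phase: emit a (word, 1) pair for every word in one input line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group all emitted values by their key.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                   .add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: collapse the values of one key into a single count.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs the three phases in order over all input lines.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : shuffle(pairs).entrySet()) {
            counts.put(entry.getKey(), reduce(entry.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // prints {big=2, cluster=1, data=2, lake=1}
        System.out.println(run(List.of("big data big cluster", "data lake")));
    }
}
```

Because each call to `map` looks at only one line and each call to `reduce` looks at only one key, the work can be split across machines without the pieces needing to coordinate, which is the property that lets this model scale from one system to many.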

How are industries or businesses using Hadoop?

Real-time workloads aside, Hadoop lends itself to all kinds of computation that are iterative, batch oriented, and able to benefit from parallel processing. Businesses use Hadoop to build complex analytics models or high-volume data storage applications such as:

- Risk Modelling

- Retrospective and predictive analytics

- Machine learning and pattern matching

- ETL pipelines

What is so special about Hadoop? What makes it so different from the others?

Some organisations have put off their data opportunities because of industry constraints. Others are unsure which distribution to choose, and others still lack the time to mature their big data delivery because of the pressure of meeting everyday business needs.

Hadoop adopters leave no opportunity on the table. For them it is non-negotiable to pursue new revenue opportunities, outpace the competition, and keep customers happy with better, faster analytics and data applications. The most effective Hadoop plans begin with selecting a recommended distribution, then mature the environment with current hybrid architectures and adopt a data lake strategy based on the technology.

Hadoop is one of the most effective and safe open source frameworks, and many organisations take advantage of it to get their work done.